https://www.youtube.com/playlist?list=PLxde5XJWZRbR5pmLiMRp771cvvfQoxAhv

In public data centers and in computational science, open-source software plays a key role in creating a productive environment for researchers.

Computational science is the modeling and simulation of the laws of nature within computer systems that offer a well-defined environment for experimental investigation. Models for climate, protein folding, or nanomaterials, for example, can be simulated and manipulated at will without being restricted by the laws of nature, and scientists no longer have to conduct time-consuming and error-prone experiments in the real world. This method leads to new observations and understandings of phenomena that would otherwise be too fast or too slow to comprehend in vitro. The processing of observational data from sources such as sensor networks and satellites, along with other data-driven workflows, is yet another challenge, as it is usually dominated by the input/output of data.

Complex climate and weather simulations can have 100,000 to a million lines of code and must be maintained and developed further for at least a decade. Therefore, scientific software is mostly open-source, particularly for large-scale simulations and bleeding-edge research in a scientific domain.

This month we’ll be hosting our evening of talks online. You can join remotely from the comfort of your own home to listen to the speakers and chat in real time with the other attendees.

AGENDA

18:15 – Join online meeting to chat with other participants

18:30 – Short introduction (5 min) of the evening by Julian Kunkel — Slides

18:35 – Presentations

20:35 – Close

The talks were live streamed via GoToWebinar and recorded for later posting on YouTube.

The videos are now published on YouTube and slides are linked below.

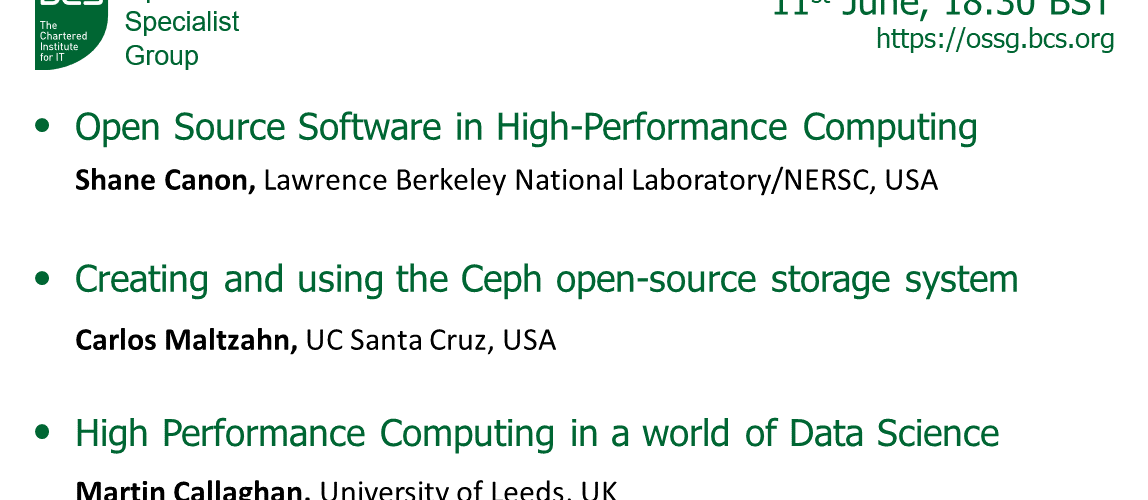

Open Source Software in High-Performance Computing

Shane Canon, Lawrence Berkeley National Laboratory/NERSC, USA

High-Performance Scientific Computing is heavily dependent on a rich ecosystem of open-source software. The HPC community is both a consumer and a contributor to the broader open-source community. In this presentation, we will review the evolution of open-source software use in HPC, give examples of how the HPC community has contributed to its growth, and the future direction of open-source in HPC.

Some lessons learned from creating and using the Ceph open-source storage system

High Performance Computing in a world of Data Science

Martin Callaghan, University of Leeds, UK

Universities and other research organisations have developed and used High Performance Computing (HPC) systems for a number of years to support problem solving across many computational domains, including Computational Fluid Dynamics and Molecular Dynamics. Design features such as a batch processing system and a fast interconnect make these systems ideal for supporting such tools and applications, which are often highly parallel.

In recent years though, with the increased interest in Data Science across a number of research fields, HPC has found itself in the position of having to support quite different tools and methodologies.

In this talk, I’ll discuss the design journey we have taken for our institutional HPC, some of the Open Source projects, tools and techniques we use with our research colleagues to support Data Science problems and some of our plans for the future.

Martin Callaghan is Research Computing Manager and leads the Research Software Engineering team at the University of Leeds in the UK, which provides High Performance Computing (HPC), Programming and Software Development consultancy across a diverse research community. His role involves Research Software Engineering, training, consultancy and outreach.

He also manages a comprehensive HPC and Research Computing training programme designed to be a ‘zero to hero’ structured introduction to HPC, Cloud and research software development.

Before joining the University of Leeds, he worked as an engineer designing machine tool control systems and as a teacher, and ran his own training and consultancy business. His personal research interests are in text analytics, particularly using neural networks to summarise text at scale.

Note: Please aim to connect by 18:25 at the latest, as the event will start promptly at 18:30.